LLM strategy: what to build and where to focus

Published on: Oct. 17, 2023, 9:29 a.m.

What will happen

Future large language models (LLMs) will be multimodal, incorporating images and video.

The current generation of LLMs is very powerful and can handle many tasks. GPT-4, GPT-3.5, and open-source models such as Llama and Mistral already solve many problems.

There will be an explosion of no-code and low-code solutions for fine-tuning, RLHF, and every other method of adapting LLMs. These will often be integrated with the cloud providers: Azure, AWS, and Google Cloud.

Ludwig.ai and other low-code and no-code tools will make customising LLMs “very” easy.

In the next six months to a year, there will be another wave of image breakthroughs like the Stable Diffusion models. In one to two years, multimodal models will become prevalent.

In the next six months to a year, API prices for GPT-4 and other models will drop significantly, because there will be a lot of competition and many open-source alternatives with minimal pricing.

Open-source LLMs will boom. Many companies will get on board, except those such as OpenAI and Anthropic, which are already backed by Microsoft and Amazon respectively.

In the next two years, LLMs will become a commodity. Whatever is “the thing” right now will be something you can simply plug into your software and use.

Nvidia is the undisputed king of the GPUs that run AI models. However, dependence on Nvidia is driving companies to build their own chips. Microsoft is a prime contender, and Google has already made this move.

Despite these efforts, Nvidia will likely maintain its leadership for another three to four years, if not more. The chip manufacturing sector is notorious for its high entry barriers due to its complexity and limited availability of production facilities.

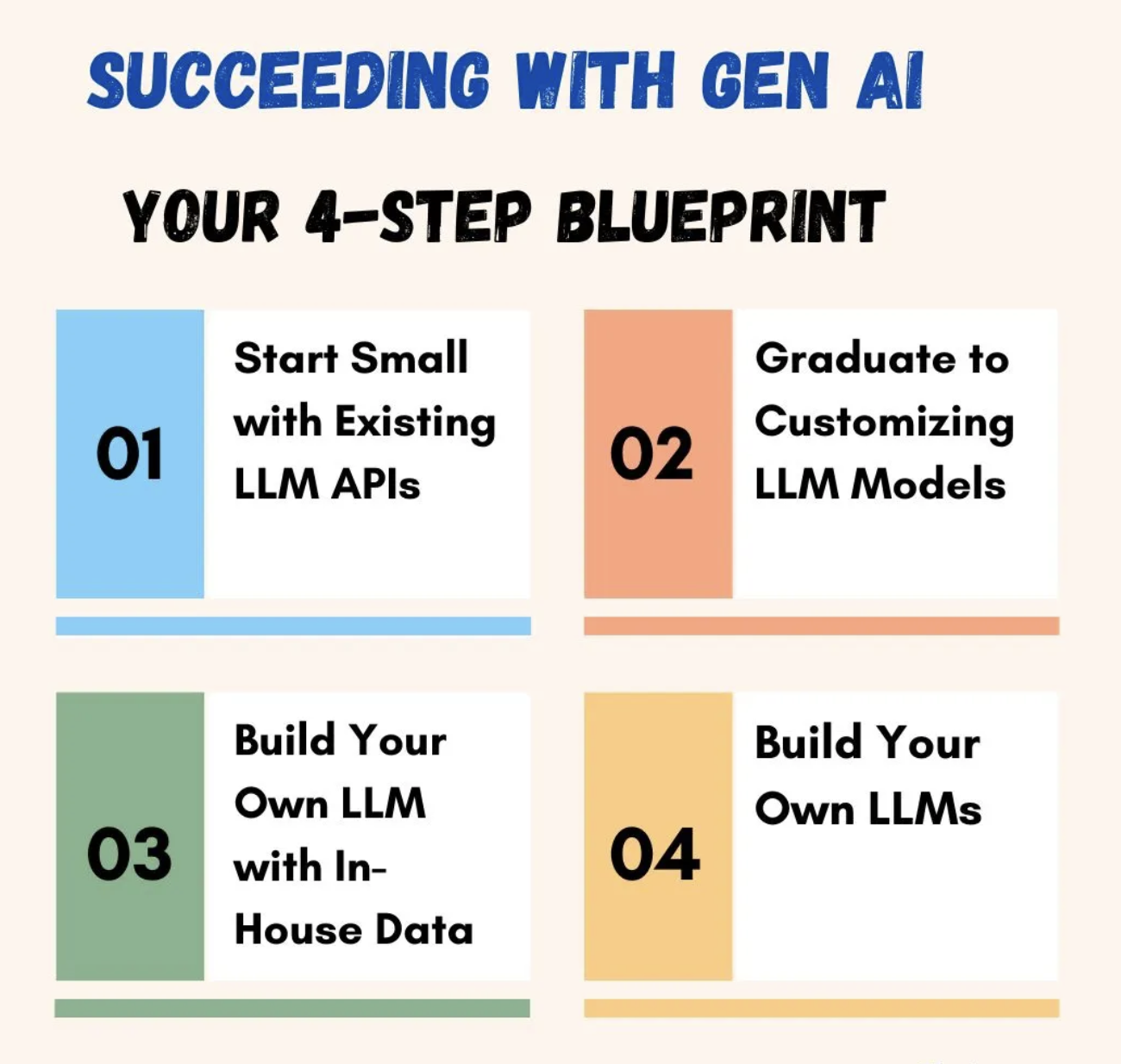

What to do and what not to do (for SMEs)

Optimising LLM pipelines might seem an attractive endeavour, but many specialists are already focused on it. Unless you're in a large organisation or contributing to a significant open-source project, it's smarter to wait. Within six months to a year, many solutions will be readily available, either through your preferred cloud services or open-source platforms.

Do not even think of competing at building an LLM from scratch. You are outnumbered by margins so large that you may not even be aware of them. And you don’t need to do it, as explained above. Very few companies in the world are doing it, and that number won’t grow soon.

Instead, invest time in experimenting with fine-tuning, RLHF, and other methods. They are here to stay and can improve your model.

Architect your infrastructure so that it can easily integrate various LLMs. LangChain is one of many options. Prioritise software design that lets you swap models in on the fly, as you'll find yourself doing it frequently.
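One way to keep models swappable, sketched here under my own assumptions rather than as a recommended design, is to have application code depend on a small interface and pick the concrete backend from a registry. All names below (`ChatModel`, `EchoModel`, `ModelRegistry`, `answer`) are hypothetical and made up for illustration. `EchoModel` is only a stand-in: a real backend would wrap a hosted API or a local open-source model behind the same `complete` method.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Protocol


class ChatModel(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in backend for illustration; it only echoes the prompt."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class ModelRegistry:
    """Maps a config key to a factory so backends can be swapped at runtime."""

    factories: Dict[str, Callable[[], ChatModel]] = field(default_factory=dict)

    def register(self, key: str, factory: Callable[[], ChatModel]) -> None:
        self.factories[key] = factory

    def create(self, key: str) -> ChatModel:
        if key not in self.factories:
            raise KeyError(f"unknown model backend: {key!r}")
        return self.factories[key]()


def answer(model: ChatModel, question: str) -> str:
    """Application code sees only the ChatModel interface."""
    return model.complete(question)


# Register two stub backends under the keys a config file might use.
registry = ModelRegistry()
registry.register("hosted", lambda: EchoModel("hosted-stub"))
registry.register("local", lambda: EchoModel("local-stub"))
```

With this shape, switching from one model to another is a change to the key passed to `registry.create(...)`, for example read from configuration, and `answer` does not need to change.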

To-do NOW

Find a problem and build a solution with an LLM. There are really a lot of them: a personal tutor for students, Q&A over your private data (I am doing that), an interview AI bot (another project of mine, with an MVP), and so on. Don’t do LLMs for coding; believe me, others (Microsoft and GitHub) will do it better and faster than you :) Try to focus on the many vertical problems that exist and that LLMs can solve or improve.

The more niche the problem, the better. You can find the people who need it and are willing to pay for it. Once you have validated the initial idea and made some money, the space of similar problems and solutions is infinite.

Many organisations, if not all, will need custom Q&A on private data. Everyone is building it too, but the market is unlimited. Customers will guide your unique value propositions and feature development. Remember that companies like Hugging Face did not start as the GitHub for AI models, and Amazon did not start as a cloud company.

These are my thoughts. If you find something useful or have comments, I would be very happy to hear them. If you have an idea and want to work on it together, feel free to contact me.